Abstract

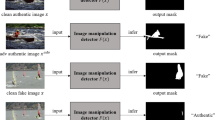

Mining the forged regions of digitally tampered images is a key research task in visual recognition. Although many algorithms address image manipulation localization, most focus only on semantic information in the spatial domain and ignore the frequency inconsistency between authentic and tampered regions. In addition, the generality and robustness of existing models suffer severely when the training and test sets have different noise distributions. To address these issues, we propose FP-Net, a frequency-perception network with adversarial training for image manipulation localization. Our method captures representations that identify boundary artifacts in the spatial domain. It also separates low- and high-frequency information in the frequency domain to obtain tampering cues. Specifically, the frequency separation sensing module broadens the local sensing range by separating multi-scale frequency-domain features. It accurately identifies high-frequency noise features in the manipulated region while distinguishing low-frequency information. The global frequency attention module uses multiple sampling and convolution operations to learn multi-scale feature information interactively. It integrates dual-domain frequency content to identify the physical locations of tampering. Adversarial training uses adversarial attacks to construct hard adversarial training samples, which reduces interference from unevenly distributed, redundant noise. Extensive experiments show that our method performs significantly better than mainstream approaches on five commonly used benchmark datasets.
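The abstract does not specify how the frequency separation sensing module is built. As a rough illustration only, the sketch below splits a grayscale image into low- and high-frequency components with a hard circular low-pass mask in the FFT domain. The function name `split_frequencies` and its `radius` parameter are hypothetical. A fixed FFT mask is a stand-in chosen for clarity and is not FP-Net's learned module.

```python
import numpy as np


def split_frequencies(image: np.ndarray, radius: float):
    """Split a 2-D image into low- and high-frequency parts (illustrative only).

    Frequencies within `radius` bins of the centred DC component are kept as the
    low-frequency part. The high-frequency part is the residual, so that
    low + high reconstructs the input exactly.
    """
    h, w = image.shape
    # Move the zero-frequency (DC) bin to index (h // 2, w // 2).
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # Euclidean distance of every frequency bin from the centred DC bin.
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    low_mask = dist <= radius
    # Undo the shift, invert, and drop the negligible imaginary residue.
    low = np.real(np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)))
    high = image - low
    return low, high
```

Calling the function with several radii (for example 2, 4 and 8) gives a crude multi-scale decomposition. It is only loosely analogous to the multi-scale separation described above. Tampering traces such as resampling noise or splicing edges tend to show up in the `high` residual.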
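The abstract says hard adversarial training samples are built with adversarial attacks but does not name the attack. The sketch below uses the fast gradient sign method (FGSM), swapped in here purely as a familiar example. It attacks a toy logistic-regression "tampered vs. authentic" patch classifier. The weights `w`, bias `b`, step size `epsilon`, and the helpers `fgsm` and `bce_loss` are all hypothetical and are not FP-Net's actual attack or model.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(x, y, w, b):
    """Binary cross-entropy of a logistic classifier on one flattened patch x."""
    p = sigmoid(x @ w + b)
    tiny = 1e-12  # guards against log(0)
    return -(y * np.log(p + tiny) + (1.0 - y) * np.log(1.0 - p + tiny))


def fgsm(x, y, w, b, epsilon):
    """One-step FGSM: move x by epsilon along the sign of d(loss)/dx.

    For logit z = w.x + b and BCE loss, the input gradient is (p - y) * w.
    Results are clipped back to the valid pixel range [0, 1].
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    x_adv = x + epsilon * np.sign(grad_x)
    return np.clip(x_adv, 0.0, 1.0)
```

In an adversarial-training loop, such perturbed samples would be mixed into the training batches so that the detector learns to resist noise it did not see in clean data.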



Ethics declarations

Conflicts of interest

The authors have no competing interests to declare that are relevant to the content of this article.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gao, J., Huang, Y. FP-Net: frequency-perception network with adversarial training for image manipulation localization. Multimed Tools Appl 83, 62721–62739 (2024). https://doi.org/10.1007/s11042-023-17914-1


DOI: https://doi.org/10.1007/s11042-023-17914-1