Abstract

Over the past 10 years, machine vision (MV) algorithms for image analysis have been develo** rapidly with computing power. At the same time, histopathological slices can be stored as digital images. Therefore, MV algorithms can provide diagnostic references to doctors. In particular, the continuous improvement of deep learning algorithms has further improved the accuracy of MV in disease detection and diagnosis. This paper reviews the application of image processing techniques based on MV in lymphoma histopathological images in recent years, including segmentation, classification and detection. Finally, the current methods are analyzed, some potential methods are proposed, and further prospects are made.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Basic clinical information about lymph

Malignant lymphoma is a tumor that originates in the lymph nodes and extranodal lymphoid tissues, accounting for 3.37% of all malignant tumors in the world (International Agency for Research on Cancer, http://gco.iarc.fr/). Annually, approximately 280,000 individuals worldwide receive a diagnosis of lymphoid malignancies, which comprise at least 38 different subtypes according to the World Health Organization (WHO) classification (Rubinstein et al. 2022). Pathologists rely on histopathological examination of tissue sections at various magnification levels for the diagnosis and classification of lymphoma. The traditional lymphoma diagnosis relies on performing a surgical biopsy of suspicious lesions, followed by applying hematoxylin and eosin (H &E) staining. This staining technique allows observing abnormal cells’ growth patterns and morphological characteristics. The morphological analysis conducted by pathologists, which includes assessing tissue structure, cell shape, and staining characteristics, remains an essential criterion for the routine histopathological staging and grading of lymph nodes (Isabelle et al. 2010; Pannu et al. 2000).

To make the above content more intuitive, we can observe the acquisition process of the histopathological image in Fig. 1. First, a biological sample is taken from a living body. Then, the biopsy is fixed to avoid chemical changes in the tissue (Hewitson and Darby 2010). After that, the tissue is cut into sections to be placed on a glass slide for staining. Then the stained tissue is covered on the slide with a thin piece of plastic or glass, which can protect the tissue and facilitate observation under a microscope. Finally, the slide is observed and digitized with a microscope.

The process of histopathology image acquisition. a–f are taken from http://library.med.utah.edu/WebPath/HISTHTML/HISTOTCH/HISTOTCH.html. g corresponds to Fig. 1(a) in Holten-Rossing (2018))

In this paragraph, we introduce the morphological features of some typical lymphomas. Because every type of lymphoma has a characteristic morphology, many lymphomas can be accurately subcategorized based on morphological characteristics (Xu et al. 2002). In NHL, follicular lymphoma (FL) is the standard type of B-cell lymphoma (as shown in Fig. 2a). FL cells usually show a distinct follicular-like growth pattern, with follicles similar in size and shape, with unclear boundaries. DLBCL is a diffusely hyperplastic large B cell malignant tumor. The cell morphology of DLBCL is diverse. The nucleus is round or oval, with single or multiple nucleoli (as shown in Fig. 2b). In HL, NLPHL presents a vaguely large nodular shape, and the background structure is an extensive spherical network composed of follicular dendritic cells (as shown in Fig. 2c).

1.2 The development of machine vision in the diagnosis and treatment of lymphoma

Many diseases can be evaluated and diagnosed by analyzing cells and tissues. Now, Computer-aided Diagnosis (CAD) systems become a significant research subject (Bergeron 2017). A CAD system generally consists of three parts (Samsi 2012). First, CAD systems segment the object from the background tissue. Second, CAD systems extract features related to the classification. Finally, a diagnosis result based on the extracted features is obtained from a classifier. In recent years, CAD has become a significant medical image analysis research direction. Usually, the medical images that require computer assistance are histopathological slides. Compared with pathologists, the analysis of diseases by CAD systems is performed quickly, and usually, only one sample image is needed to get accurate results (Di Ruberto et al. 2015). With CAD systems, pathological images can be automatically classified. This can enable doctors to be more efficient in diagnosing diseases, and the diagnosis results can be more accurate and objective (Zhang and Metaxas 2016; Zhang et al. 2015). Today, CAD is used in many fields of medicine. For example, CAD systems play a critical role in the early detection of breast cancer (Rangayyan et al. 2007), lung cancer diagnosis (Reeves and Kostis 2000), and arrhythmia detection (Oweis and Hijazi 2006). In Kourou et al. (2015), a CAD system is mainly used in cancer diagnosis and prognosis. Kong et al. (2008) describe a CAD system that can classify stromal development and neuroblastoma grades. A CAD system is composed of image preprocessing, segmentation, feature extraction, feature reduction, detection and classification, and post-processing modules.

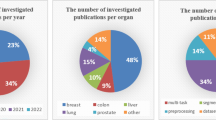

In CAD systems, machine vision (MV) is an important research direction. For example, in Lee et al. (2018), a CAD system based on deep learning is developed to locate and differentiate metastatic lymph nodes of thyroid cancer using ultrasound images, a thriving example of MV as a high-sensitivity screening tool. At present, MV has been used in agriculture (Patrício and Rieder 2018), medicine (Obermeyer and Emanuel 2016), military fields (Budiharto et al. 2020) and various industrial applications. As shown in Fig. 3, the applications of MV in the diagnosis and treatment of lymphoma mainly include segmentation, classification, and detection. Besides, the number of applications and tasks has increased, such as quantitative analysis (Shi et al. 2016), analysis of characteristics of lymphoma (Martins et al. 2019), and so on.

However, CAD has the following disadvantages. First, CAD requires a large-scale dataset to train the neural network or machine learning algorithm. However, medical images are challenging to acquire, and the data sets of medical images are quite small, which makes CAD poor for recognition results. In addition, since staining techniques vary among pathologists, there are large variations in the data from different batches, making the training process for CAD more difficult. Finally, since current image classification models require training and inference on large computing devices, the deployment of CAD at terminals is currently one of the current research difficulties (Prakash et al. 2023). The diagnosis and classification of lymphoma are crucial in clinical treatment and prognosis. Accurate diagnosis heavily relies on the expertise of pathologists. When dealing with follicular hyperplasia, it is important for pathologists to accurately differentiate between follicular lymphoma (FL) and follicular hyperplasia (FH), as these lesions can sometimes have very similar characteristics. Histopathological screening of different lymphoma types often poses challenges for pathologists, primarily due to the subtle variations in histological appearance and the complexities involved in visually differentiating between various types of lymphomas. This process is prone to inconsistencies and variations in interpretation between different observers and laboratories. Studies have indicated that there is a 20% variation in lymphoma diagnoses by pathologists (Pathologique 2017; Bowen et al. 2014; Matasar et al. 2012).

Therefore, this review addresses the following problems. First, it focuses on the urgency of quickly identifying the problems and methods that need to be solved for lymphoma histopathology images. Second, it aims to assist pathologists in accurately identifying the focal areas of lymphatic histopathology images.

1.3 Motivation of this review

This paper comprehensively reviews the MV methods for lymphatic histopathology image analysis. The motivation of this study is to study the popular technologies and trends of MV and to explore the future potential of MV in the diagnosis and treatment of lymphoma. In the course of our work, we found that some survey papers are related to the applications of MV in this area, and the comparison of the number of lymphatic-related papers and total papers in other reviews is shown in Table 1.

From Table 1, we can find the following drawbacks. First, some survey papers only focus on cancer diagnosis and detection by medical image analysis, with a lack of description of detailed methods (Loukas and Linney 2004; Cai et al. 2008; Gurcan et al. 2009; He et al. 2012; Kumar et al. 2013; Irshad et al. 2013; Arevalo et al. 2014; Madabhushi and Lee 2016; Jothi and Rajam 2017; Litjens et al. 2017; Ker et al. 2017; Robertson et al. 2018; Bera et al. 2019; Zhou et al. 2020). Second, some survey papers review medical images for a single method, without presenting all MV methods (Cruz and Wishart 2006; Belsare and Mushrif 2012; Greenspan et al. 2016). Finally, none of the survey papers contributed to a comprehensive summary of the applications of MV in LHIA. In order to address the above-mentioned drawbacks, we investigate from the lymphatic histopathology image dataset, MV methods and LHIA tasks. Therefore, we refer to the methodology of other field reviews (Jahanbakhshi et al. 2021a, b, c), this study summarizes more than 170 works from 1999 to 2020 to fill the gap of MV in this field. The works summarized in this paper are scoured from ar**v, IEEE, Springer, Elsevier, and so on. The screening process of the whole work is shown in Fig. 4. Twenty-six keywords are used to search for papers we are interested in, and a total of 2175 papers are collected. After two rounds of screening, we retain 171 papers, including 135 papers on lymphoma histopathology image analysis and 36 survey papers.

This study contains the following innovations and motivations compared to previous studies. First, in contrast to previous studies which tend to focus on medical images of different diseases, this study focuses on lymph histopathology image analysis and the comparison result is shown in Table 1. In addition, different from previous studies that focused on a single task in lymph histopathology image analysis for review, this study provides a more complete and detailed analysis of all processes and tasks in lymph histopathology images. Furthermore, this study provides a more detailed summary of the advantages and disadvantages of the new technique compared to previous studies. Finally, this study also gives predictions for the future direction of the technology.

1.4 Structure of this review

The content introduced in each section of this paper is shown in Fig. 5 as follows: Sect. 2 introduces commonly used datasets and evaluation methods. Section 3 introduces commonly used preprocessing methods, and Sect. 4 introduces commonly used image segmentation methods. Sections 5, 6 and 7 introduce the use of CAD technology for feature extraction, classification, and detection methods, and finally Sect. 8 summarizes this article and made further prospects for future work.

2 Datasets and evaluation methods

In the applications of MV in analysing histopathological images of lymphoma, we have discussed some publicly available datasets that are frequently used. Besides, we listed evaluation metrics used in segmentation, classification, and detection tasks.

2.1 Publicly available datasets

Five publicly available datasets are discussed in this section; among them, four of them are Camelyon16 (Camelyon16 2016), Camelyon17 (Camelyon16 2016), IICUB-2008 (Shamir et al. 2008) and DLBCL-Morph (Vrabac et al. 2021) and the 5th dataset is collected from the National Cancer Institute (http://www.cancer.gov/) and National Institute on Aging (NIA) (https://www.nia.nih.gov/). Table 2 shows the basic information of the datasets.

The Camelyon16 challenge is organized by International Symposium on Biomedical Imaging (ISBI) in 2016. The purpose of Camelyon16 is to advance the algorithms for automated detection of cancer metastases in H &E stained lymph node images. This competition has a high clinical significance in reducing the workload of pathologists and the subjectivity in the diagnosis. The data used in Camelyon16 consists of 400 whole-slide images (WSIs) of SLN, which are collected in Radboud University Medical Center (Radboudumc) (Nijmegen, The Netherlands), and the University Medical Center Utrecht (Utrecht, the Netherlands). The training dataset includes 270 WSIs, and the test dataset includes 130 WSIs. In Fig. 6a–c are WSIs at low, medium and high resolution respectively (Camelyon16 2016).

The Camelyon17 is the second challenge launched by the Diagnostic Image Analysis Group (DIAG) and Department of Pathology in Radboudumc. The purpose of Camelyon17 is to advance the algorithms for automated detection and classification of cancer metastases in H &E stained lymph node images. Like Camelyon 16, Camalyon 17 also has a high clinical significance. The training dataset includes 500 WSIs, and the test dataset includes 500 WSIs (as shown in Fig. 6).

The IICBU-2008 dataset is proposed by Shamir et al. (2008) in 2008 to provide free access of biological image datasets for computer experts, it includes nine small datasets containing different organs or cells. Three types of malignant lymphoma are proposed in the dataset containing lymphoma. There are 113 CLL images, 139 FL images and 122 MCL images (as shown in Fig. 7). The images are stained with H &E and usually used for classification such as Meng et al. (2010) and Bai et al. (2019).

The dataset used in Roberto et al. (2017) and Ribeiro et al. (2018) is from the NCI and NIA, contains 173 histological NHL images which are comprised of 12 CLL images, 62 FL images and 99 MCL images (as shown in Fig. 8).

In DLBCL-Morph, a total of 209 HE-stained patches of diffuse large B-cell lymphoma are included, and Ground Truth regions are annotated for each image.

2.2 Evaluation method

This section introduces some commonly used evaluation metrics in the classification, segmentation, and detection tasks of lymphoma histopathology images.

2.2.1 Some basic metrics

Here, we presented several commonly used evaluation metrics. Suppose we want to classify two types of samples, recorded as positive and negative, respectively: True positive (TP), False positive (FP), False negative (FN) and True negative (TN). The value in the diagonal of the confusion matrix is the number of correct classifications for each category.

2.2.2 Evaluation criteria for classification models

In classification tasks, when a classifier is trained with enough training samples, the next step is to provide test samples to check whether the test samples are correctly classified. Then, a method to evaluate and quantify the results of this classification is necessary. Accuracy (ACC), Precision, Sensitivity (SEN), Recall, F1-score, Receiver Operating Characteristic Curve (ROC curve) and Area Under Curve (AUC) are always used to evaluate classification performance. In addition, some metrics such as positive predictive value (PPV) and negative predictive value (NPV) are also used in Bollschweiler et al. (2004). PPV represents the proportion of true positive samples among all samples classified as positive and is given as TP/(TP+FP). NPV represents the proportion of true negative samples among all samples classified as negative and is given as TN/(TN+FN). Finally, SEN and SPE are also called true positive rates (TPR) and true negative rates (TNR). Table 3 shows the commonly used evaluation metrics in classification tasks.

2.2.3 Evaluation criteria for segmentation methods

Segmentation can be used to detect the region of interest (ROI) in histopathological images. In tasks of histopathology image analysis, ROIs include tissue components such as lymphocytes and cell nuclei. Segmentation is the process of dividing ROIs from the tissue. Since segmentation is generally used to prepare for subsequent detection and classification, so it is necessary to evaluate the segmentation result by appropriate metrics.

The segmentation metrics commonly used in segmentation include Dice coefficient (DICE), Hausdorff distance (HD), mean absolute distance (MAD) (Cheng et al. 2010), Jaccard index (JAC) (Fatakdawala et al. 2010) and so on. The following are the formulas for the above metrics. \(S_g\) is a set of points (\(g_1, g_2,..., g_n\)) constituting the automatically segmented contour, \(S_t\) is a set of points (\(t_1, t_2,..., t_n\)) constituting the ground truth contour.

-

(1)

DICE represents the ratio of the area where the two regions intersect to the total area and is given by

$$\begin{aligned} \text{DICE}=\frac{2 \vert S_g \cap S_t \vert }{\vert S_g \vert + \vert S_t \vert } \end{aligned}$$(1) -

(2)

HD between \(S_g\) and \(S_t\) is the maximum among the minimum distance computed from each point of \(S_g\) to each point of \(S_t\). HD is defined by

$$\begin{aligned} \text{HD}= & {} \text{max}(h(S_g, S_t), h(S_t, S_g)) \end{aligned}$$(2)$$\begin{aligned} h(S_g, S_t)= & {} \mathop {\text{max}}_{g \in S_g}\mathop {\text{max}}_{t \in S_t}\parallel g-t \parallel \end{aligned}$$(3) -

(3)

MAD between \(S_g\) and \(S_t\) is the mean of the minimum distance computed from each point of \(S_g\) to each point of \(S_t\). MAD is defined by

$$\begin{aligned} \text{MAD}=\frac{1}{n}\mathrm{\Sigma } _{g=1}^{n}\lbrace \text{min}_{t \in S_t} \lbrace h(g,t)\rbrace \rbrace \end{aligned}$$(4) -

4)

JAC represents the intersection of two regions divided by their union and is defined by

$$\begin{aligned} \text{JAC}=\frac{\vert S_g \cap S_t \vert }{\vert S_g \cup S_t \vert } \end{aligned}$$(5)

2.2.4 Evaluation criteria for detection methods

Because most of the detection tasks are completed by classification, many metrics in the classification tasks can also be used to evaluate the results of the detection tasks. Such as ACC, Confusion matrix, Precision, Recall, ROC curve, AUC, SEN, SPE in Sect. 2.2.2. In addition, free response operating characteristic (FROC) is used to evaluate the result in Lin et al. (2018), dice similarity coefficient (DSC) (as shown in Eq. 6) is used to evaluate the result in Senaras et al. (2019) and AI (Artificial Intelligence) score is used to evaluate the probability of lymph node metastasis in Harmon et al. (2020).

2.3 Summary

In summary, we introduce the datasets commonly used in tasks of LHIA, among which the commonly used data sets are Camelyon16, Camelyon17 and IICBU-2008. Next, we summarize the evaluation metrics commonly used in classification, segmentation and detection tasks. We find that the commonly used metrics in classification and detection tasks are ACC, SEN, SPE, AUC. DICE and HD are the commonly used metrics in segmentation tasks.

3 Image preprocessing

In order to obtain the expected results in tasks of LHIA, the images must be in good quality. In this section, we introduce the preprocessing methods commonly used in tasks of LHIA, which are based on color, filter, threshold, morphology, histogram and other methods.

3.1 Color-based preprocessing techniques

At present, some CAD methods in tasks of LHIA convert images from RGB color space to other color spaces. The commonly used color-based preprocessing methods on LHIA as shown in Table 4.

In Angulo et al. (2006), Sertel et al. (2008, 2009), Basavanhally et al. (2009), Belkacem-Boussaid et al. (2009, 2010a, b, c), Orlov et al. (2010), Akakin and Gurcan (2012), Oztan et al. (2012), Saxena et al. (2013), Di Ruberto et al. (2015), Tosta et al. (2017a), Zhu et al. (2019), Bai et al. (2019) and Martins et al. (2019, 2021), \(L^*a^*b^*\) color space is used on LHIA for \(L^*a^*b^*\) allowing color changes to be compatible with differences in visual perception. In Chen et al. (2005); Sertel et al. (2010b); Di Ruberto et al. (2015); Tosta et al. (2017a), \(L^*u^*v^*\) color space is used on LHIA for \(L^*u^*v^*\) color space are uniform in perception. In Basavanhally et al. (2008), Sertel et al. (2008a), Samsi et al. (2010, 2012), Belkacem-Boussaid et al. (2010b), Han et al. (2010), Akakin and Gurcan (2012), Ishikawa et al. (2014), Kuo et al. (2014), Zarella et al. (2015), Fauzi et al. (2015), Di Ruberto et al. (2015), Wang et al. (2020), the patches are rotated by 0 and 90° after crop**. In Hashimoto et al. (2020), the patches are rotated to augment data when the number of patches in a WSI is less than 3000.

3.7 Summary

The advantages and limitations of the above image preprocessing techniques are as follows. Color-based preprocessing techniques can effectively quantify color characteristics by adjusting the color space, color equalization and color enhancement. However, adjusting to other color spaces also has color distortion leading to poor results in subsequent tasks. Filter-based preprocessing techniques can effectively smooth the image to achieve the effect of denoising. However, in some tasks where small objects need to be identified, this method leads to distortion of the small objects resulting in incorrect identification. Threshold-based preprocessing techniques can extract the texture of an image quickly and efficiently, but this method can only be applied to some image segmentation tasks, and the application of this method for other tasks requires subsequent exploration. Morphology-based preprocessing techniques enable quick extraction of regions of interest, but the disadvantages of this method are similar to those of threshold-based preprocessing techniques, which are limited to image segmentation tasks. Histogram-based preprocessing techniques can perfectly resolve the color differences in images and thus better represent the texture features of images. However, it often does not have a good performance on small medical image datasets.

4 Image segmentation

In this section, we have summarized the segmentation tasks in LHIA. Segmentation is a crucial step in image processing applications, which identifies ROIs, including nuclei, glands, and lymphocytes. The ROIs include follicular regions, lymphocytes, CBs, centrocytes, and neoplastic cells in lymphoma images. It is essential to correlate accurate identification of ROIs with pathologies (Ong et al. 1996).

4.1 Threshold-based segmentation methods

Image thresholding is a widely used segmentation technique that generates binary images by selecting a reasonable threshold and the workflow is shown in Fig. 16. Table 9 shows the references using threshold-based segmentation methods. In Sertel et al. (2008b, 2009), Michail et al. (2014b) and Dimitropoulos et al. (2014), the threshold value is selected by manual selection. In the work of Zorman et al. (2007), Belkacem-Boussaid et al. (2009), Codella et al. (2016), Neuman et al. (2010) and Mandyartha et al. (2020), it is selected by adaptive selection algorithm.

4.2 Clustering-based segmentation methods

In the clustering algorithm, the goal is to group similar things. The clustering algorithm usually does not use training data for learning, called unsupervised learning in ML. Contrary to supervised segmentation methods, unsupervised segmentation does not train a classifier. Unsupervised segmentation groups the pixels of an image into several clusters based on similar pixels with a property (color, texture, intensity value, etc.). The commonly used clustering-based segmentation method is k-means clustering (Sertel et al. 2008a, b, 2009; Oztan et al. 2012; Samsi et al. 2010, 2012; Han et al. 2010; Shi et al. 2016; Arora 2013). Table 10 shows the references using clustering-based segmentation methods and the workflow of clustering segmentation is shown in Fig. 17.

4.3 Region-based segmentation methods

Watershed algorithm is a segmentation method based on region, which segments ROIs by finding watershed lines. In the watershed algorithm, grey-level images are considered a topographic. The grey level of a pixel corresponds to its elevation, and the high grey level corresponds to a mountain, the low grey level corresponds to the valley. When the water level rises to a certain height, the water will overflow the current valley. A dam can be built on the watershed to avoid the collection of water in two valleys. Therefore, the image is divided into 2-pixel sets; one is the valley pixel set submerged by water, and the other is the watershed line pixel set. In the end, the lines formed by these dams partition the entire image to achieve the segmentation result of the image (Fig. 18).

In the work of Neuman et al. (2010), a watershed is applied to segment nuclei as the second step of segmentation. In addition, multiple follicles may merge into one region in the process of k-means clustering. Therefore, the iterative watershed algorithm is used to split the overlap** regions until the segmentation ends (Samsi et al. 2010). Furthermore, in Zarella et al. (2015), a method for predicting axillary lymph nodes is proposed, which employs a watershed transform for segmenting candidate nuclei and uses SVM for detection.

4.4 Deep learning based segmentation methods

In addition to some traditional segmentation methods, Swiderska-Chadaj et al. (2019), Bándi et al. (2019), Senaras et al. (2019), Gunesli et al. (2022) and Wu et al. (2022) use deep learning segmentation techniques in LHIA and the workflow is shown in Fig. 19.

In the work of Swiderska-Chadaj et al. (2019), four deep learning methods are used in automatically detecting lymphocytes of histopathology images. In Bándi et al. (2019), a fully convolutional neural network (FCNN) is applied for differentiating tissue and background regions for avoiding low-efficient scanning in empty background areas. A method which is used to automatically detect follicles by U-Net in CD8 stained FL images is developed in Senaras et al. (2019). In the work of Gunesli et al. (2022), DLBCL-Morph dataset is segmented utilizing a Morph-Net model based on Hover-Net. In Wu et al. (2022), a genetic algorithm is applied to address the problem of overlap** lymphocytes.

4.5 Other segmentation methods

In addition to the above segmentation methods, some other segmentation methods also achieve good results.

In the work of Belkacem-Boussaid et al. (2010c), a technique is developed to segment the follicular regions in the H &E stained FL images which based on an active contour model and is initialized through users manually selecting seed points in follicular regions. Vertex graph algorithm is proposed to separate touching cells (Yang et al. 2008). In the work of Basavanhally et al. (2008), a Bayesian classifier and template matching segmentation method is proposed to automatically detect lymphocytes. Figure 20 shows the result of the proposed segmentation method. In Sertel et al. (2010b), an image analysis system using mean-shift algorithm and morphological operations is designed to quantitatively evaluate digitized FL tissue slides. Figure 21 shows the process of segmentation. In the work of Cheng et al. (2010), a multi-phase level set framework to avoids the vacuum and overlap problems is used to initial segmentation of cells. In the method, n = 4 phase segmentation is selected to separate the ROIs for there are four regions with different intensity levels in original images. In Sertel et al. (2010a), a computer-aided detection system which used to automatically detect CB cells in FL images is proposed. In the work of Kong et al. (2011a), an integrated framework is proposed, which comprises an algorithm of supervised cell-image segmentation and a method of splitting touching-cell. In Kong et al. (2011b), a new algorithm is designed for splitting touching/overlap** cells. In the work of Oger et al. (2012), a framework is proposed for segmenting follicular regions before histological grading. Furthermore, A system which can automatically differentiate the categories for diffuse lymphoma cells is designed using minimum variance quantization approach. Es Negm et al. (2017).

a Original image. b Voronoi diagram. c Delaunay triangulation. d Minimum spanning tree showed in segmented lymphocytes. The figure corresponds to Fig. 3 in Basavanhally et al. (2008)

a Original image. b The segmentation result of mean-shift. c The final result. The figure corresponds to Figs. 1 and 2 in Sertel et al. (2010b)

Unsupervised learning methods tend to demonstrate excellent performance in smal-scale dataset. In the work of Tosta et al. (2017a), an unsupervised method for segmenting nuclear components is proposed. Additionally, Tosta et al. (2018) introduce a genetic unsupervised algorithm to obtain object diagnoses. This algorithm segments nuclei by evaluating various genetic algorithms’ fitness functions. SVM is utilized for classification, using the features extracted from the segmented regions. In Cheikh et al. (2017), a fast superpixel segmentation algorithm is applied to prevent overlap. Dimitropoulos et al. (2017) develops a framework for detecting and classifying CBs in FL images stained with H&E and PAX5.

4.6 Summary

In summary, advantages and limitations of the above image segmentation techniques are as follows. Threshold-based, clustering-based and region-based segmentation methods are unsupervised training methods which can quickly segment the contours of the region to be obtained, but they are not effective in identifying small regions and are susceptible to the influence of noise points. Deep learning based segmentation methods are supervised training and have good segmentation results with pixel-level labels datasets, but pixel-by-pixel labeling images requires a lot of time. From the above summary of the segmentation in LHIA, the threshold-based segmentation methods and k-means clustering-based segmentation methods in the traditional methods are more commonly used. In terms of deep learning, U-net is an efficient segmentation method, and FCNN can also achieve similar performance to U-net with low computational cost. In addition to the traditional and deep learning segmentation methods, some other segmentation methods also achieve good performance.

5 Feature extraction

In image processing tasks, it is expected to extract features from the output of the image segmentation. Feature extraction is a crucial step in histopathology image analysis, which is a process of extracting features of the object from segmented images. Table 11 shows the commonly used feature extraction methods, including traditional and deep learning-based methods.

5.1 Visual features extraction

The visual features of an image include color, shape, texture.

5.1.1 Color features extraction

Color features belong to the internal features of an image and describe the surface properties of images. Color features are widely used in CAD system.

In the field of feature extraction, different color spaces are used to extract features from images. In Zorman et al. (2007, 2011), Akakin and Gurcan (2012), Acar et al. (2013), Shi et al. (2016) and Bianconi et al. (2020) in which the RGB color space is used to extract various features such as color intensity, histograms, and statistical measures. In addition, the HSV color space in Sertel et al. (2008a), Samsi et al. (2010), Han et al. (2010), Oger et al. (2012), Akakin and Gurcan (2012), Zarella et al. (2015) and Samsi et al. (2010, 2012) were used for clustering and feature extraction, with special attention to the H-channel. \(L^*u^*v^*\) color space in Sertel et al. (2010b), Belkacem-Boussaid et al. (2010b) and Akakin and Gurcan (2012) helps to construct the feature space and extract morphological features. Finally, in Meng et al. (2010, 2013), Kong et al. (2011a) and Fauzi et al. (2015), images were segmented into chunks to extract color histogram features.

5.1.2 Texture features extraction

Texture feature extraction methods have been developed. Techniques such as Self-Organizing Feature Map**s (SOFMs) (Sertel et al. 2008b, 2009), Gray-Level Coevolutionary Matrices (GLCM) (Sertel et al. 2008a; Samsi et al. 2010, 2012; Oger et al. 2012; Oztan et al. 2012; Bianconi et al. 2020), Gabor features (Cheng et al. 2010; Meng et al. 2013; Acar et al. 2013; Sandhya et al. 2013) and other methods (Sertel et al. 2010a; Michail et al. 2014a; Shi et al. 2016; Di Ruberto et al. 2015; Fatakdawala et al. 2010; Kong et al. 2011a) have been improved for better texture and color quantization analysis. SOFMs are used for nonlinear color quantization, while GLCM helps in extracting texture features from the quantized color space. In addition, principal component analysis (PCA) is applied in different color spaces (RGB, HSI, \(L^*a^*b^*\)) to extract color texture features (Belkacem-Boussaid et al. 2009, 2010a, b). These methods play a key role in feature extraction for automatic cell recognition, tissue analysis, and the construction of feature sets for classification tasks using classifiers such as SVM and QDA. The effectiveness of these techniques is validated by the high classification accuracy in various studies (Fig. 22).

The key points retrieved by SIFT (color online). a Key points without morphological refining. b Key points after morphological refining. The figure corresponds to Fig. 4 in Kuo et al. (2014)

5.1.3 Shape feature extraction

In the work of Yang et al. (2008), the contour of boundary of the touching cells is extracted by the followed steps: First, L\(_2\)E robust estimation is applied to produce a rough estimation for the boundaries. Second, a robust gradient vector flow extracts the contour from the background.

In Sertel et al. (2008a) and Cooper et al. (2009), the size of a cell, eccentricity are extracted for feature construction. In Belkacem-Boussaid et al. (2009), the area and perimeter of cells are extracted. In Sertel et al. (2010b), area and nuclear to cytoplasm ratio are extracted in the feature vector. In Belkacem-Boussaid et al. (2010b), the largest area is extracted through Otsu thresholding, opening, closing, labeling and area classification. In Oztan et al. (2012), the morphological features extracted include the length of major axes, length of minor axes and area of ellipses. In Arora (2013), two features are extracted from segmented images, which include curvature and center of the nuclei. In Sandhya et al. (2013), the extracted shape features include area, bounding box, perimeter, convex area, solidity, major axes and minor axes. In Zarella et al. (2015), six features are extracted including perimeter, area, aspect-ratio, circularity and two measurements quantifying the shape of nuclei. In Wang et al. (2022a), and deep learning based CT image analytics protocol to identify lung adenocarcinoma category and high-risk tumor area (Chen et al. 2022b). No matter from the aspects of image pre-processing, feature extraction and selection, segmentation, and classification, or from the aspects of MV method design and proposed framework idea, the methods of MV summarized in this review can bring a new perspective to the research in other fields.

References

Acar E, Lozanski G, Gurcan M (2013) Tensor-based computation and modeling in multi-resolution digital pathology imaging: application to follicular lymphoma grading. In: Medical Imaging 2013: digital pathology, vol 8676. International Society for Optics and Photonics, p 867603

Ahmad M, Ahmed I, Ouameur M, Jeon G (2022) Classification and detection of cancer in histopathologic scans of lymph node sections using convolutional neural network. Neural Process Lett 55:3763–3778

Akakin H, Gurcan M (2012) Content-based microscopic image retrieval system for multi-image queries. IEEE Trans Inf Technol Biomed 16(4):758–769

Alom M, Aspiras T, Taha T et al (2019) Advanced deep convolutional neural network approaches for digital pathology image analysis: a comprehensive evaluation with different use cases. ar**v preprint. ar**v:1904.09075

Angulo J, Klossa J, Flandrin G (2006) Ontology-based lymphocyte population description using mathematical morphology on colour blood images. Cell Mol Biol (Noisy-le-grand) 52(6):2–15

Arevalo J, Cruz-Roa A, González FA (2014) Histopathology image representation for automatic analysis: a state-of-the-art review. Rev Med 22(2):79–91

Arora B (2013) Computer assisted grading schema for follicular lymphoma based on level set formulation. In: Proceedings of SCES 2013. IEEE, Monterey, pp 1–6

Azevedo Tosta T, de Faria P, Neves L et al (2021) Evaluation of statistical and Haralick texture features for lymphoma histological images classification. Comput Methods Biomech Biomed Eng Imaging Vis 9(6):1–12

Bai J, Jiang H, Li S, Ma X (2019) NHL pathological image classification based on hierarchical local information and GoogleNet-based representations. BioMed Res Int 2019:1065652

Bándi P, Balkenhol M, van Ginneken B et al (2019) Resolution-agnostic tissue segmentation in whole-slide histopathology images with convolutional neural networks. PeerJ 7:e8242

Basavanhally A, Agner S, Alexe G et al (2008) Manifold learning with graph-based features for identifying extent of lymphocytic infiltration from high grade, Her2+ breast cancer histology. In: Proceedings of MICCAI 2008

Basavanhally A, Ganesan S, Agner S et al (2009) Computerized image-based detection and grading of lymphocytic infiltration in Her2+ breast cancer histopathology. IEEE Trans Biomed Eng 57(3):642–653

Basu S, Agarwal R, Srivastava V (2022) Deep discriminative learning model with calibrated attention map for the automated diagnosis of diffuse large b-cell lymphoma. Biomed Signal Process Control 76:103728

Belkacem-Boussaid K, Sertel O, Lozanski G et al (2009) Extraction of color features in the spectral domain to recognize centroblasts in histopathology. In: Proceedings of EMBS 2009. IEEE, pp 3685–3688

Belkacem-Boussaid K, Pennell M, Lozanski G et al (2010a) Computer-aided classification of centroblast cells in follicular lymphoma. Anal Quant Cytol Histol 32(5):254–260

Belkacem-Boussaid K, Pennell M, Lozanski G et al (2010b) Effect of pathologist agreement on evaluating a computer-aided assisted system: recognizing centroblast cells in follicular lymphoma cases. In: 2010 IEEE international symposium on biomedical imaging: from nano to macro. IEEE, pp 1411–1414

Belkacem-Boussaid K, Prescott J, Lozanski G, Gurcan M (2010c) Segmentation of follicular regions on H &E slides using a matching filter and active contour model. In: Medical imaging 2010: computer-aided diagnosis, vol 7624. International Society for Optics and Photonics, p 762436

Belsare A, Mushrif M (2012) Histopathological image analysis using image processing techniques: an overview. Signal Image Process 3(4):23

BenTaieb A, Hamarneh G (2018) Predicting cancer with a recurrent visual attention model for histopathology images. In: Proceedings of MICCAI 2018. Springer, Cham, pp 129–137

Bera K, Schalper K, Rimm D et al (2019) Artificial intelligence in digital pathology—new tools for diagnosis and precision oncology. Nat Rev Clin Oncol 16(11):703–715

Bergeron S (2017) Investigation into feasibility of color and texture features for automated detection of lymph node metastases in histopathological images. Retrieved from the University of Minnesota Digital Conservancy https://hdl.handle.net/11299/188816

Bianconi F, Kather J, Reyes-Aldasoro C (2020) Experimental assessment of color deconvolution and color normalization for automated classification of histology images stained with hematoxylin and eosin. Cancers 12(11):3337

Bollschweiler E, Mönig S, Hensler K et al (2004) Artificial neural network for prediction of lymph node metastases in gastric cancer: a phase ii diagnostic study. Ann Surg Oncol 11(5):506–511

Bowen JM, Perry AM, Laurini JA et al (2014) Lymphoma diagnosis at an academic centre: rate of revision and impact on patient care. Br J Haematol 166(2):202–208

Brancati N, De Pietro G, Frucci M, Riccio D (2019) A deep learning approach for breast invasive ductal carcinoma detection and lymphoma multi-classification in histological images. IEEE Access 7:44709–44720

Budiharto W, Irwansyah E, Suroso JS, Gunawan AAS (2020) Design of object tracking for military robot using pid controller and computer vision. ICIC Express Lett 14(3):289–294

Cai TW, Kim J, Feng DD (2008) Content-based medical image retrieval. In: Biomedical information technology. Elsevier, Amsterdam, pp 83–113

Camelyon16: Camelyon Grand Challenge 2016 (2016). https://camelyon17.grand-challenge.org/

Candelero D, Roberto G, do Nascimento M et al (2020) Selection of CNN, Haralick and fractal features based on evolutionary algorithms for classification of histological images. In: Proceedings of BIBM 2020. IEEE, pp 2709–2716

Cheikh B, Elie N, Plancoulaine B et al (2017) Spatial interaction analysis with graph based mathematical morphology for histopathology. In: Proceedings of ISBI 2017. IEEE, pp 813–817

Chen J, Srinivas C (2016) Automatic lymphocyte detection in h &e images with deep neural networks. ar**v preprint. ar**v:1612.03217

Chen H, Li C, Wang G et al (2022a) Gashis-transformer: a multi-scale visual transformer approach for gastric histopathological image detection. Pattern Recogn 130:108827

Chen L, Qi H, Lu D et al (2022b) Machine vision-assisted identification of the lung adenocarcinoma category and high-risk tumor area based on ct images. Patterns 3(4):100464

Chen W, Meer P, Georgescu B et al (2005) Image mining for investigative pathology using optimized feature extraction and data fusion. Comput Methods Programs Biomed 79(1):59–72

Chen R, **g Y, Jackson H (2016) Identifying metastases in sentinel lymph nodes with deep convolutional neural networks. ar**v preprint. ar**v:1608.01658

Chen L, Qi H, Lu D et al (2022) A deep learning based CT image analytics protocol to identify lung adenocarcinoma category and high-risk tumor area. STAR protocols 3(3):101485

Cheng J, Veronika M, Rajapakse J (2010) Identifying cells in histopathological images. In: Proceedings of ICPR 2010. Springer, Berlin, pp 244–252

Codella N, Moradi M, Matasar M et al (2016) Lymphoma diagnosis in histopathology using a multi-stage visual learning approach. In: Medical Imaging 2016: digital pathology, vol 9791. International Society for Optics and Photonics, p 97910H

Cooper L, Sertel O, Kong J et al (2009) Feature-based registration of histopathology images with different stains: an application for computerized follicular lymphoma prognosis. Comput Methods Programs Biomed 96(3):182–192

Cruz JA, Wishart DS (2006) Applications of machine learning in cancer prediction and prognosis. Cancer Informatics 2:117693510600200030

Di Ruberto C, Fodde G, Putzu L (2015) On different colour spaces for medical colour image classification. In: Proceedings of CAIP 2015. Springer, Dordrecht, pp 477–488

Dif N, Elberrichi Z (2020) Efficient regularization framework for histopathological image classification using convolutional neural networks. In: Proceedings of IJCINI 2020, vol 14(4), pp 62–81

Dif N, Attaoui M, Elberrichi Z et al (2021) Transfer learning from synthetic labels for histopathological images classification. Appl Intell 52(1):358–377

Dimitropoulos K, Michail E, Koletsa T et al (2014) Using adaptive neuro-fuzzy inference systems for the detection of centroblasts in microscopic images of follicular lymphoma. SIViP 8(1):33–40

Dimitropoulos K, Barmpoutis P, Koletsa T et al (2017) Automated detection and classification of nuclei in Pax5 and H&E-stained tissue sections of follicular lymphoma. SIViP 11(1):145–153

do Nascimento M, Martins A, Tosta T, Neves L (2018) Lymphoma images analysis using morphological and non-morphological descriptors for classification. Comput Methods Programs Biomed 163:65–77

Duran-Lopez L, Dominguez-Morales J, Conde-Martin A et al (2020) Prometeo: a CNN-based computer-aided diagnosis system for WSI prostate cancer detection. IEEE Access 8:128613–128628

El Achi H, Belousova T, Chen L et al (2019) Automated diagnosis of lymphoma with digital pathology images using deep learning. Annals of Clinical & Laboratory Science 49(2):153–160

Engert A, Vassilakopoulos TP (2016) Hodgkin lymphoma. Springer, Cham

Es Negm A, Kandil AH, Hassan OAEF (2017) Decision support system for lymphoma classification. Curr Med Imaging 13(1):89–98

Fanizzi A, Lorusso V, Biafora A et al (2021) Sentinel lymph node metastasis on clinically negative patients: preliminary results of a machine learning model based on histopathological features. Appl Sci 11(21):10372

Fatakdawala H, Xu J, Basavanhally A et al (2010) Expectation-maximization-driven geodesic active contour with overlap resolution (EMAGACOR): application to lymphocyte segmentation on breast cancer histopathology. IEEE Trans Biomed Eng 57(7):1676–1689

Fauzi M, Pennell M, Sahiner B et al (2015) Classification of follicular lymphoma: the effect of computer aid on pathologists grading. BMC Med Inform Decis Mak 15(1):1–10

Feller AC, Diebold J (2003) Histopathology of nodal and extranodal non-Hodgkin’s lymphomas. Springer, Berlin

Greenspan H, Van Ginneken B, Summers RM (2016) Guest editorial deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans Med Imaging 35(5):1153–1159

Gunesli G, Jewsbury R, Raza S, Rajpoot N (2022) Morph-Net: end-to-end prediction of nuclear morphological features from histology images. In: Medical optical imaging and virtual microscopy image analysis: first international workshop, MOVI 2022, held in conjunction with MICCAI 2022, Singapore, 18 September 2022, Proceedings. Springer, Cham, pp 136–144

Gurcan MN, Boucheron LE, Can A et al (2009) Histopathological image analysis: a review. IEEE Rev Biomed Eng 2:147–171

Han J, Shin D, Arthur G, Shyu C (2010) Multi-resolution tile-based follicle detection using color and textural information of follicular lymphoma ihc slides. In: Proceedings of BIBMW 2010. IEEE, pp 866–867

Harmon S, Sanford T, Brown G et al (2020) Multiresolution application of artificial intelligence in digital pathology for prediction of positive lymph nodes from primary tumors in bladder cancer. JCO Clin Cancer Informatics 4:367–382

Hashimoto N, Fukushima D, Koga R et al (2020) Multi-scale domain-adversarial multiple-instance CNN for cancer subtype classification with non-annotated histopathological images. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3852–3861

He L, Long LR, Antani S, Thoma GR (2012) Histology image analysis for carcinoma detection and grading. Comput Methods Programs Biomed 107(3):538–556

Hewitson TD, Darby IA (2010) Histology protocols. Springer, Berlin

Holten-Rossing H (2018) Digitalization in pathology: diagnostics of cancer in a faster and safer way. PhD thesis

Huang Y, Xue Y, Lan J et al (2021) Deep learning framework for detecting positive lymph nodes of gastric cancer on histopathological images. In: 2021 6th International conference on biomedical imaging, signal processing, pp 14–23

Huang H, Huang S, Du Q (2023) Evaluation of soil heavy metal pollution based on k-means and SVM. Int J Environ Sci Technol 11:12015–12024

International Agency for Research on Cancer. http://gco.iarc.fr/

Irshad H, Veillard A, Roux L et al (2013) Methods for nuclei detection, segmentation, and classification in digital histopathology: a review—current status and future potential. IEEE Rev Biomed Eng 7:97–114

Isabelle M, Rogers KD, Stone N (2010) Correlation map**: rapid method for identification of histological features and pathological classification in mid infrared spectroscopic images of lymph nodes. J Biomed Opt 15(2):026030

Ishikawa T, Takahashi J, Takemura H et al (2014) Gastric lymph node cancer detection using multiple features support vector machine for pathology diagnosis support system. In: Proceedings of ICBE 2014. Springer, Cham, pp 120–123

Jahanbakhshi A, Abbaspour-Gilandeh Y, Heidarbeigi K, Momeny M (2021a) A novel method based on machine vision system and deep learning to detect fraud in turmeric powder. Comput Biol Med 136:104728

Jahanbakhshi A, Abbaspour-Gilandeh Y, Heidarbeigi K, Momeny M (2021b) Detection of fraud in ginger powder using an automatic sorting system based on image processing technique and deep learning. Comput Biol Med 136:104764

Jahanbakhshi A, Momeny M, Mahmoudi M, Radeva P (2021c) Waste management using an automatic sorting system for carrot fruit based on image processing technique and improved deep neural networks. Energy Rep 7:5248–5256

Jamaluddin M, Fauzi M, Abas F (2017) Tumor detection and whole slide classification of H&E lymph node images using convolutional neural network. In: Proceedings of ICSIPA 2017. IEEE, pp 90–95

Janiesch C, Zschech P, Heinrich K (2021) Machine learning and deep learning. Electron Mark 31(3):685–695

Jiang H, Li Z, Li S, Zhou F (2018) An effective multi-classification method for nhl pathological images. In: Proceedings of SMC 2018. IEEE, pp 763–768

Jothi J, Rajam V (2017) A survey on automated cancer diagnosis from histopathology images. Artif Intell Rev 48(1):31–81

Kandel I, Castelli M (2020) A novel architecture to classify histopathology images using convolutional neural networks. Appl Sci 10(8):2929

Ker J, Wang L, Rao J, Lim T (2017) Deep learning applications in medical image analysis. IEEEAccess 6:9375–9389

Kong J, Sertel O, Shimada H et al (2008) A new combined computer-aided prognosis system for classifying neuroblastoma stromal development and grading neuroblastic differentiation. Rapport Tech Ohio State Univ 25:26

Kong H, Gurcan M, Belkacem-Boussaid K (2011a) Partitioning histopathological images: an integrated framework for supervised color-texture segmentation and cell splitting. IEEE Trans Med Imaging 30(9):1661–1677

Kong H, Gurcan M, Belkacem-Boussaid K (2011b) Splitting touching-cell clusters on histopathological images. In: 2011 IEEE international symposium on biomedical imaging: from nano to macro. IEEE, pp 208–211

Kourou K, Exarchos TP, Exarchos KP et al (2015) Machine learning applications in cancer prognosis and prediction. Comput Struct Biotechnol J 13:8–17

Kumar A, Kim J, Cai W et al (2013) Content-based medical image retrieval: a survey of applications to multidimensional and multimodality data. J Digit Imaging 26(6):1025–1039

Kumar M, Babaie M, Zhu S et al (2017) A comparative study of cnn, bovw and lbp for classification of histopathological images. In: Proceedings of SSCI 2017. IEEE, pp 1–7

Kuo Y, Ko C, Lee M (2014) Lymphatic infiltration detection in breast cancer H&E image prior to lymphadenectomy. Biomed Eng Appl Basis Commun 26(04):1440007

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Lee JH, Baek JH, Kim JH et al (2018) Deep learning-based computer-aided diagnosis system for localization and diagnosis of metastatic lymph nodes on ultrasound: a pilot study. Thyroid 28(10):1332–1338

Li Y, ** W (2018) Cancer metastasis detection with neural conditional random field. ar**v preprint. ar**v:1806.07064

Li L, Pan X, Yang H et al (2020a) Multi-task deep learning for fine-grained classification and grading in breast cancer histopathological images. Multimedia Tools Appl 79(21):14509–14528

Li D, Bledsoe J, Zeng Y et al (2020b) A deep learning diagnostic platform for diffuse large b-cell lymphoma with high accuracy across multiple hospitals. Nat Commun 11(1):1–9

Lin H, Chen H, Dou Q et al (2018) SCANNET: a fast and dense scanning framework for metastastic breast cancer detection from whole-slide image. In: Proceedings of WACV 2018. IEEE, pp 539–546

Linder N, Taylor J, Colling R et al (2019) Deep learning for detecting tumour-infiltrating lymphocytes in testicular germ cell tumours. J Clin Pathol 72(2):157–164

Litjens G, Sánchez CI, Timofeeva N et al (2016) Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep 6(1):1–11

Litjens G, Kooi T, Bejnordi Babak E et al (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88

Liu Y, Gadepalli K, Norouzi M et al (2017) Detecting cancer metastases on gigapixel pathology images. ar**v preprint. ar**v:1703.02442

Loukas CG, Linney A (2004) A survey on histological image analysis-based assessment of three major biological factors influencing radiotherapy: proliferation, hypoxia and vasculature. Comput Methods Programs Biomed 74(3):183–199

Lucas M, Jansen I, Savci-Hei**k C et al (2019) Deep learning for automatic gleason pattern classification for grade group determination of prostate biopsies. Virchows Arch 475(1):77–83

Madabhushi A, Lee G (2016) Image analysis and machine learning in digital pathology: challenges and opportunities. Med Image Anal 33:170–175

Mandyartha E, Anggraeny F, Muttaqin F, Akbar F (2020) Global and adaptive thresholding technique for white blood cell image segmentation. J Phys Conf Ser 1569:022054

Martins AS, Neves LA, Faria PR et al (2019) Colour feature extraction and polynomial algorithm for classification of lymphoma images. In: Iberoamerican congress on pattern recognition. Springer, Berlin, pp 262–271

Martins A, Neves L, de Faria P et al (2021) A hermite polynomial algorithm for detection of lesions in lymphoma images. Pattern Anal Appl 24(2):523–535

Matasar M, Shi W, Silberstien J et al (2012) Expert second-opinion pathology review of lymphoma in the era of the world health organization classification. Ann Oncol 23(1):159–166

Meng T, Lin L, Shyu M, Chen S (2010) Histology image classification using supervised classification and multimodal fusion. In: 2010 IEEE international symposium on multimedia. IEEE, pp 145–152

Meng T, Shyu M, Lin L (2013) Multimodal information integration and fusion for histology image classification. In: Multimedia data engineering applications and processing. IGI Global, Pennsylvania, pp 35–50

Michail E, Dimitropoulos K, Koletsa T et al (2014a) Morphological and textural analysis of centroblasts in low-thickness sliced tissue biopsies of follicular lymphoma. In: Proceedings of EMBS 2014. IEEE, pp 3374–3377

Michail E, Kornaropoulos E, Dimitropoulos K et al (2014b) Detection of centroblasts in H&E stained images of follicular lymphoma. In: Proceedings of SIU 2014. IEEE, pp 2319–2322

Miyoshi H, Sato K, Kabeya Y et al (2020) Deep learning shows the capability of high-level computer-aided diagnosis in malignant lymphoma. Lab Invest 100(10):1300–1310

Mohlman J, Leventhal S, Hansen T et al (2020) Improving augmented human intelligence to distinguish burkitt lymphoma from diffuse large b-cell lymphoma cases. Am J Clin Pathol 153(6):743–759

Neuman U, Korzynska A, Lopez C, Lejeune M (2010) Segmentation of stained lymphoma tissue section images. In: Information technologies in biomedicine. Springer, Berlin, pp 101–113

Nugaliyadde A, Wong K, Parry J et al (2020) RCNN for region of interest detection in whole slide images. In: Proceedings of ICNIP 2020. Springer, Cham, pp 625–632

Obermeyer Z, Emanuel EJ (2016) Predicting the future-big data, machine learning, and clinical medicine. N Engl J Med 375(13):1216

Oger M, Belhomme P, Gurcan M (2012) A general framework for the segmentation of follicular lymphoma virtual slides. Comput Med Imaging Graph 36(6):442–451

Ong S, ** X, Sinniah R et al (1996) Image analysis of tissue sections. Comput Biol Med 26(3):269–279

Orlov N, Chen W, Eckley D et al (2010) Automatic classification of lymphoma images with transform-based global features. IEEE Trans Inf Technol Biomed 14(4):1003–1013

Oweis R, Hijazi L (2006) A computer-aided ECG diagnostic tool. Comput Methods Programs Biomed 81(3):279–284

Oztan B, Kong H, Gürcan M, Yener B (2012) Follicular lymphoma grading using cell-graphs and multi-scale feature analysis. In: Medical imaging 2012: computer-aided diagnosis, vol 8315. International Society for Optics and Photonics, p 831516

Pannu HK, Wang KP, Borman TL, Bluemke DA (2000) MR imaging of mediastinal lymph nodes: evaluation using a superparamagnetic contrast agent. J Magn Reson Imaging 12(6):899–904

Pathologique L (2017) Impact of expert pathologic review of lymphoma diagnosis: study of patients from the french lymphopath network. J Clin Oncol 35:2008–2017

Patrício DI, Rieder R (2018) Computer vision and artificial intelligence in precision agriculture for grain crops: a systematic review. Comput Electron Agric 153:69–81

Prakash S, Harshitha K, Charitha A et al (2023) Computer-aided diagnosis using machine learning techniques. In: Proceedings of CSMDI 2023. IEEE, pp 387–391

Rangayyan RM, Ayres FJ, Desautels JL (2007) A review of computer-aided diagnosis of breast cancer: toward the detection of subtle signs. J Franklin Inst 344(3–4):312–348

Reeves AP, Kostis WJ (2000) Computer-aided diagnosis for lung cancer. Radiol Clin 38(3):497–509

Ribeiro MG, Neves LA, Roberto GF et al (2018) Analysis of the influence of color normalization in the classification of non-Hodgkin lymphoma images. In: Proceedings of SIBGRAPI 2018. IEEE, pp 369–376

Roberto GF, Neves LA, Nascimento MZ et al (2017) Features based on the percolation theory for quantification of non-hodgkin lymphomas. Comput Biol Med 91:135–147

Roberto G, Lumini A, Neves L et al (2021) Fractal neural network: a new ensemble of fractal geometry and convolutional neural networks for the classification of histology images. Expert Syst Appl 166:114103

Robertson S, Azizpour H, Smith K, Hartman J (2018) Digital image analysis in breast pathology—from image processing techniques to artificial intelligence. Transl Res 194:19–35

Rubinstein SM, Bhutani D, Lynch RC et al (2022) Patients recently treated for b-lymphoid malignancies show increased risk of severe covid-19. Blood Cancer Discov 3(3):181–193

Samsi SS (2012) Computer aided analysis of IHC and H&E stained histopathological images in lymphoma and lupus. PhD thesis, The Ohio State University

Samsi S, Lozanski G, Shanarah A et al (2010) Detection of follicles from ihc-stained slides of follicular lymphoma using iterative watershed. IEEE Trans Biomed Eng 57(10):2609–2612

Samsi S, Krishnamurthy A, Gurcan M (2012) An efficient computational framework for the analysis of whole slide images: application to follicular lymphoma immunohistochemistry. J Comput Sci 3(5):269–279

Sandhya B, Chayadevi M, Anitha P (2013) Automated classification of centroblast cells using morphological and texture features. Int J Comput Appl. https://doi.org/10.5120/12474-8869

Saxena P, Singh S, Agrawal P (2013) Texture classification of biased cytoplasmic tissue sample from histopathological imagery by Gabor application. J Netw Innov Comput 1:248–259

Schäfer T, Schäfer H, Schmitz A et al (2013) Image database analysis of hodgkin lymphoma. Comput Biol Chem 46:1–7

Schmitz A, Schäfer T, Schäfer H et al (2012) Automated image analysis of hodgkin lymphoma. ar**v preprint. ar**v:1209.3189

Senaras C, Pennell M, Chen W et al (2017) Foxp3-stained image analysis for follicular lymphoma: optimal adaptive thresholding with maximal nucleus coverage. In: Medical imaging 2017: digital pathology, vol 10140. International Society for Optics and Photonics, p 101400E

Senaras C, Niazi M, Arole V et al (2019) Segmentation of follicles from cd8-stained slides of follicular lymphoma using deep learning. In: Medical imaging 2019: digital pathology, vol 10956. International Society for Optics and Photonics, p 109560R

Sertel O, Kong J, Lozanski G et al (2008a) Computerized microscopic image analysis of follicular lymphoma. In: Medical imaging 2008: computer-aided diagnosis, vol 6915. International Society for Optics and Photonics, p 691535

Sertel O, Kong J, Lozanski G et al (2008b) Texture classification using nonlinear color quantization: application to histopathological image analysis. In: Proceedings of ICASSP 2008. IEEE, pp 597–600

Sertel O, Kong J, Catalyurek U et al (2009) Histopathological image analysis using model-based intermediate representations and color texture: follicular lymphoma grading. J Signal Process Syst 55(1):169–183

Sertel O, Lozanski G, Shana’ah A, Gurcan M (2010a) Computer-aided detection of centroblasts for follicular lymphoma grading using adaptive likelihood-based cell segmentation. IEEE Trans Biomed Eng 57(10):2613–2616

Sertel O, Catalyurek U, Lozanski G et al (2010b) An image analysis approach for detecting malignant cells in digitized H&E-stained histology images of follicular lymphoma. In: Proceedings of ICPR 2010. IEEE, pp 273–276

Shamir N, Orlov L, Eckley D et al (2008) IICBU 2008: a proposed benchmark suite for biological image analysis. Med Biol Eng Comput 46(9):943–947

Sheng B, Zhou M, Hu M et al (2020) A blood cell dataset for lymphoma classification using faster R-CNN. Biotechnol Biotechnol Equip 34(1):413–420

Shi P, Zhong J, Huang R, Lin J (2016) Automated quantitative image analysis of hematoxylin-eosin staining slides in lymphoma based on hierarchical kmeans clustering. In: 2016 8th international conference on information technology in medicine and education (ITME). IEEE, pp 99–104

Somaratne U, Wong K, Parry J et al (2019) Improving follicular lymphoma identification using the class of interest for transfer learning. In: Proceedings of DICTA 2019. IEEE, pp 1–7

Song Y, Li Q, Huang H et al (2016) Histopathology image categorization with discriminative dimension reduction of Fisher vectors. In: Proceedings of ECCV 2016. Springer, Berlin, pp 306–317

Song Y, Li Q, Huang H et al (2017) Low dimensional representation of fisher vectors for microscopy image classification. IEEE Trans Med Imaging 36(8):1636–1649

Steinbuss G, Kriegsmann M, Zgorzelski C et al (2021) Deep learning for the classification of non-hodgkin lymphoma on histopathological images. Cancers 13(10):2419

Swiderska-Chadaj Z, Pinckaers H, van Rijthoven M et al (2019) Learning to detect lymphocytes in immunohistochemistry with deep learning. Med Image Anal 58:101547

Syrykh C, Abreu A, Amara N et al (2020) Accurate diagnosis of lymphoma on whole-slide histopathology images using deep learning. NPJ Digit Med 3(1):1–8

Tasnim Z, Chakraborty S, Shamrat F et al (2021) Deep learning predictive model for colon cancer patient using cnn-based classification. Int J Adv Comput Sci Appl 12

The National Cancer Institute. http://www.cancer.gov/

The National Institute on Aging. https://www.nia.nih.gov/

Thorat P (2020) Classification of sub-type of lymphoma using deep learning. PhD thesis, Dublin, National College of Ireland

Titoriya A, Sachdeva S (2019) Breast cancer histopathology image classification using alexnet. In: Proceedings of ISCON 2019. IEEE, pp 708–712

Tosta T, Faria P, Neves L et al (2017a) Computational method for unsupervised segmentation of lymphoma histological images based on fuzzy 3-partition entropy and genetic algorithm. Expert Syst Appl 81:223–243

Tosta T, Do Nascimento M, De Faria P, Neves L (2017b) Application of evolutionary algorithms on unsupervised segmentation of lymphoma histological images. In: Proceedings of CBMS 2017. IEEE, pp 89–94

Tosta T, de Faria P, Neves L et al (2018) Fitness functions evaluation for segmentation of lymphoma histological images using genetic algorithm. In: Proceedings of ICAEC 2018. Springer, Cham, pp 47–62

Vrabac D, Smit A, Rojansky R et al (2021) DLBCL-MORPH: morphological features computed using deep learning for an annotated digital dlbcl image set. Sci Data 8(1):135

Wang D, Khosla A, Gargeya R et al (2016) Deep learning for identifying metastatic breast cancer. ar**v preprint. ar**v:1606.05718

Weinstein HJ, Hudson MM, Link MP (2007) Pediatric lymphomas. Springer, Berlin

Wollmann T, Rohr K (2017) Automatic breast cancer grading in lymph nodes using a deep neural network. ar**v preprint. ar**v:1707.07565

Wollmann T, Eijkman C, Rohr K (2018) Adversarial domain adaptation to improve automatic breast cancer grading in lymph nodes. In: Proceedings of ISBI 2018. IEEE, pp 582–585

Wu H, Pang K, Pang G et al (2022) A soft-computing based approach to overlapped cells analysis in histopathology images with genetic algorithm. Appl Soft Comput 126:109279

**ao K, Wang Z, Xu T, Wan T (2017) A deep learning method for detecting and classfying breast cancer metastasis in lymph nodes on histopathological images. https://www.semanticscholar.org/paper/A-DEEP-LEARNING-METHOD-FOR-DETECTING-AND-BREAST-IN-**ao-Wang/72ed2f4b2b464e36f85c70dcf660f4bb9468c64c. Accessed 31 Mar 2019

Xu Y, McKenna RW, Kroft SH (2002) Assessment of cd10 in the diagnosis of small b-cell lymphomas: a multiparameter flow cytometric study. Am J Clin Pathol 117(2):291–300

Yang L, Tuzel O, Meer P, Foran D (2008) Automatic image analysis of histopathology specimens using concave vertex graph. In: Proceedings of MICCAI 2008. Springer, Berlin, pp 833–841

Yildirim M, Cinar A (2021) Classification with respect to colon adenocarcinoma and colon benign tissue of colon histopathological images with a new cnn model: MA_ColonNET. Int J Imaging Syst Technol 32:155–162

Yu W, Li C, Wang R et al (2021) Machine learning based on morphological features enables classification of primary intestinal t-cell lymphomas. Cancers 13(21):5463

Zarella M, Breen D, Reza A et al (2015) Lymph node metastasis status in breast carcinoma can be predicted via image analysis of tumor histology. Anal Quant Cytopathol Histopathol 37(5):273–285

Zhan P, Qin X, Zhang Q, Sun Y (2023) A novel structural damage detection method via multi-sensor spatial-temporal graph-based features and deep graph convolutional network. IEEE Trans Instrum Meas. https://doi.org/10.1109/TIM.2023.3238048

Zhang S, Metaxas D (2016) Large-scale medical image analytics: Recent methodologies, applications and future directions. Med Image Anal 33:98–101

Zhang X, Su H, Yang L, Zhang S (2015) Fine-grained histopathological image analysis via robust segmentation and large-scale retrieval. In Proceedings of CVPR 2015, pp 5361–5368

Zhou X, Li C, Rahaman M et al (2020) A comprehensive review for breast histopathology image analysis using classical and deep neural networks. IEEE Access 8:90931–90956

Zhu H, Jiang H, Siqi Li, Li H, Pei Y (2019) A novel multispace image reconstruction method for pathological image classification based on structural information. BioMed Res Int 2019:3530903

Zorman M, Kokol P, Lenic M et al (2007) Symbol-based machine learning approach for supervised segmentation of follicular lymphoma images. In: Proceedings of CBMS 2007. IEEE, pp 115–120

Zorman M, de la Rosa J, Dinevski D (2011) Classification of follicular lymphoma images: a holistic approach with symbol-based machine learning methods. Wien Klin Wochenschr 123(23):700–709

Acknowledgements

This work is supported by the “National Natural Science Foundation of China” (No. 82220108007), “Scientific Research Fund of SiChuan Provincial Science and Technology Department” (No. 2021YFH0069), “Scientific Research Fund of Chengdu Science and Technology Burea” (No. 2022-YF05-01186-SN, 2022-YF05-01128-SN), “Bei**g **sike Clinical Oncology Research Foundation” (No. Y-tongshu2021/qn-0379), and “Applied Basic Research Program (Youth Project)” (No. 2023JH2/101600016). We also thank Miss Zixian Li and Mr. Guoxian Li for their important discussion. Chen Li and **aoyan Li have the same contributions as corresponding authors in this paper.

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of interest

There is no conflicts of interest in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, H., Li, X., Li, C. et al. What can machine vision do for lymphatic histopathology image analysis: a comprehensive review. Artif Intell Rev 57, 71 (2024). https://doi.org/10.1007/s10462-024-10701-w

Published:

DOI: https://doi.org/10.1007/s10462-024-10701-w