Abstract

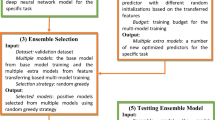

The broad learning system (BLS) is a neural network structure based on the random vector functional link network (RVFL) that offers fast modeling, good generalization ability, and high accuracy on regression tasks. To improve the feature representation capacity of BLS while preserving its training efficiency, we propose a novel ensemble deep model based on BLS, named EDBLS. Unlike other deep or ensemble variants of BLS, EDBLS produces its results after the model is trained only once. As with BLS, the performance of EDBLS depends mainly on its structure, so the network parameters must be selected for each real-world problem. Because EDBLS has more parameters than BLS, grid search is too inefficient for this purpose. We therefore apply the coot optimization algorithm (COOT) to determine the network structure of EDBLS for regression problems; note that COOT serves only as a tool for determining that structure. Experimental results on MNIST, NORB, and several regression benchmark datasets show that EDBLS achieves better classification and regression performance, and that COOT helps select the network parameters of EDBLS for regression.
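For readers unfamiliar with the base model, the following is a minimal sketch of a plain BLS regressor, not of EDBLS, whose ensemble deep structure the abstract does not specify. It assumes the commonly described form: randomly weighted feature nodes, randomly weighted nonlinear enhancement nodes, and output weights solved in closed form by ridge regression. The function names, hyperparameter names, and default values are illustrative assumptions, and refinements such as sparse fine-tuning of the feature weights are omitted.

```python
import numpy as np

def fit_bls(X, Y, n_feature=20, n_enhance=40, reg=1e-3, seed=0):
    """Fit a simplified BLS; all names and defaults here are illustrative."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # Random, untrained weights for the feature (mapped) nodes.
    Wf = rng.standard_normal((d, n_feature))
    bf = rng.standard_normal(n_feature)
    Z = X @ Wf + bf                       # linear feature nodes
    # Random, untrained weights for the enhancement nodes.
    We = rng.standard_normal((n_feature, n_enhance))
    be = rng.standard_normal(n_enhance)
    H = np.tanh(Z @ We + be)              # nonlinear enhancement nodes
    A = np.hstack([Z, H])                 # broad layer [Z | H]
    # Only the output weights are learned, via closed-form ridge regression.
    W = np.linalg.solve(A.T @ A + reg * np.eye(A.shape[1]), A.T @ Y)
    return {"Wf": Wf, "bf": bf, "We": We, "be": be, "W": W}

def predict_bls(model, X):
    """Recompute the broad layer for new inputs and apply the output weights."""
    Z = X @ model["Wf"] + model["bf"]
    H = np.tanh(Z @ model["We"] + model["be"])
    return np.hstack([Z, H]) @ model["W"]
```

In this sketch the structure is fixed by `n_feature`, `n_enhance`, and `reg`. These are the kind of structural parameters that, according to the abstract, grow in number for EDBLS and motivate replacing grid search with a metaheuristic such as COOT.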

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61976216 and 61672522).

Cite this article

Zhang, C., Ding, S., Guo, L. et al. Broad learning system based ensemble deep model. Soft Comput 26, 7029–7041 (2022). https://doi.org/10.1007/s00500-022-07004-z

DOI: https://doi.org/10.1007/s00500-022-07004-z