Abstract

Introduction

The most common way of assessing surgical performance is for expert raters to observe a surgical task and rate the trainee’s performance. However, modern technology offers considerable potential for automated skill assessment and workflow analysis. The aim of the present study was to evaluate machine learning (ML) algorithms, using data from a Myo armband as the sensor device, for skill level assessment and phase detection in laparoscopic training.

Materials and methods

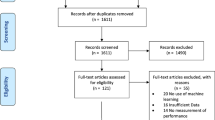

Participants of three experience levels in laparoscopy performed a suturing and knot-tying task on silicone models. Experts rated performance using the Objective Structured Assessment of Technical Skills (OSATS). Participants wore Myo armbands (Thalmic Labs™, Ontario, Canada) to record acceleration, angular velocity, orientation, and Euler orientation. ML algorithms (decision forest, neural networks, boosted decision tree) were compared for skill level assessment and phase detection.

Results

A total of 28 participants (8 beginners, 10 intermediates, 10 experts) were included, and 99 knots were available for analysis. A neural network regression model had the lowest mean absolute error in predicting OSATS score (3.7 ± 0.6 points, R² = 0.03 ± 0.81; OSATS range: 4–37 points). An ensemble of binary-class neural networks yielded the highest accuracy in predicting skill level (beginners: 82.2% correctly identified, intermediates: 3.0%, experts: 79.5%), whereas standard statistical analysis failed to discriminate between skill levels. Phase detection on raw data showed the best results with a multi-class decision jungle (average 16% correctly identified), but improved to 43% average accuracy with two-class boosted decision trees after application of dynamic time warping (DTW).
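The two regression metrics reported above measure different things: the mean absolute error gives the typical size of the prediction error in OSATS points, while the coefficient of determination (R²) compares the model against always predicting the mean score. The following is a minimal sketch of both definitions in plain Python. The function names and the example scores are hypothetical and are not taken from the study data.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between observed and predicted scores."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - (residual SS / total SS)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical OSATS-like scores (illustration only, not study data).
observed = [10, 20, 30]
always_mean = [20, 20, 20]  # a "model" that ignores the sensor data

mae = mean_absolute_error(observed, always_mean)
r2 = r_squared(observed, always_mean)
print(f"MAE = {mae:.2f}, R^2 = {r2:.2f}")
```

A model that always predicts the mean score has an R² of exactly zero by construction, so an R² close to zero indicates that predictions explain little of the variance in the scores, whatever the absolute error happens to be.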

Conclusion

Modern machine learning algorithms aid in interpreting complex surgical motion data, even when standard analysis fails. Dynamic time warping offers the potential to process and compare surgical motion data in order to allow automated surgical workflow detection. However, further research is needed to interpret and standardize available data and improve sensor accuracy.
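Dynamic time warping compares two sequences that follow the same pattern at different speeds by finding the lowest-cost alignment between them. The sketch below shows the classic dynamic-programming formulation for one-dimensional signals with an absolute-difference local cost. It is an illustration only, not the implementation used in the study: the function name, the local cost, and the example signals are assumptions, and real armband data would be multi-channel.

```python
def dtw_distance(a, b):
    """Accumulated cost of the best DTW alignment between sequences a and b."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] holds the minimal accumulated cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor cells.
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b repeats its sample
                cost[i][j - 1],      # b advances, a repeats its sample
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


# Hypothetical motion traces: the same movement at two speeds, plus a flat one.
reference = [0, 1, 2, 1, 0]
slower = [0, 0, 1, 1, 2, 2, 1, 1, 0, 0]
flat = [2, 2, 2, 2, 2]

print(dtw_distance(reference, slower))
print(dtw_distance(reference, flat))
```

Because the alignment may repeat samples, a slower execution of the same movement stays close to the reference, whereas a different movement does not. This property is what makes the technique attractive for comparing task phases performed at different speeds.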

Ethics declarations

Disclosure

Felix Nickel reports receiving travel support for conference participation as well as equipment provided for laparoscopic surgery courses by KARL STORZ, Johnson & Johnson, Intuitive, and Medtronic. Karl-Friedrich Kowalewski, Carly R. Garrow, Mona W. Schmidt, Laura Benner and Beat Müller-Stich have no conflicts of interest or financial ties to disclose.

About this article

Cite this article

Kowalewski, KF., Garrow, C.R., Schmidt, M.W. et al. Sensor-based machine learning for workflow detection and as key to detect expert level in laparoscopic suturing and knot-tying. Surg Endosc 33, 3732–3740 (2019). https://doi.org/10.1007/s00464-019-06667-4

DOI: https://doi.org/10.1007/s00464-019-06667-4